Google’s Gemini AI Features: What to Expect in Android 15

The Made by Google event kicked off with a demonstration of Gemini AI’s features, which will be integrated with Android 15.

The event, where Google introduced new technologies and devices, began with a presentation of Gemini AI’s capabilities. Google announced that Gemini 1.5, built on the company’s new Tensor processors, ranked first in the chatbot benchmark. According to the statement, the new models will make smartphones safer, smarter, and easier to use. They will be available on Google’s Pixel devices and on the Android 15 operating system. Depending on the model, the AI can run on the device or as a device-cloud hybrid. Moreover, Gemini will be able to understand not only what is being said but also the underlying intent, enabling it to reason with users. Here are the models introduced:

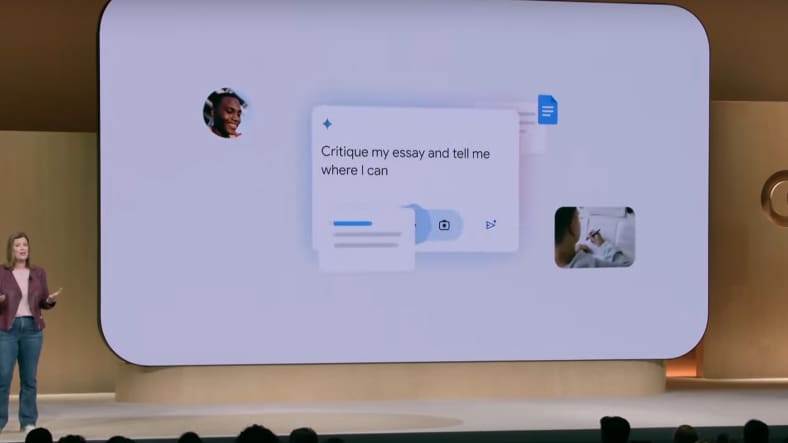

Google announced that the Gemini AI assistant is currently available in over 200 countries. Going forward, Gemini AI will be more responsive on foldable devices and offer various user interfaces. Gemini AI will also be able to use personal information, but only with the user’s permission. For instance, it can combine a user’s sports activities with information from a coach or with nutritional details.

Gemini AI can be used with text, voice, or uploaded images.

Gemini Nano

According to Google’s statement, Gemini Nano is the first model developed specifically for smartphones and can run entirely on the device. This provides significant protection for stored information and data.

Running directly on the device, Gemini AI can review YouTube videos for the user, write emails, and edit lists. It can also take the initiative based on user requests and hold different kinds of dialogue, such as simulating a job interview.

Gemini Live

Gemini Live offers 10 different voices, but its most striking feature is that it speaks naturally. For example, it can give more natural answers to the question “How are you?”. It can also understand intentions from general conversation rather than explicit commands, and its speech is quite fluent compared to previous versions. The feature is currently available to paid users and only in English. Gemini Live is available starting today.

Call Notes

This feature runs entirely on the phone and keeps a record of important information from conversations. The user will be in full control of the feature, and the person on the other end of the call will also be notified.

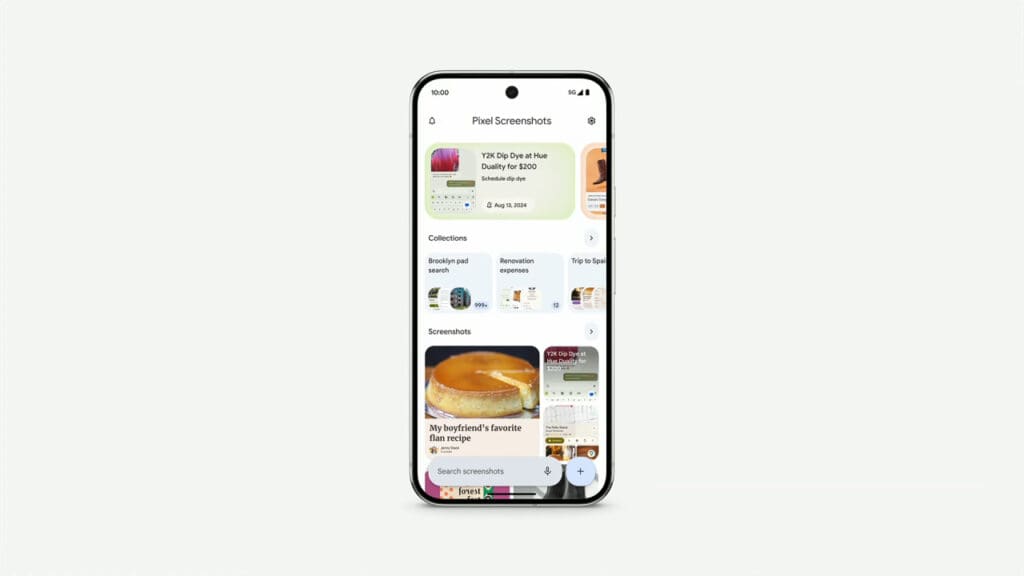

Pixel Screenshots

Pixel Screenshots helps users classify and save images, and search for and buy objects that appear in saved images. This feature will be available on all Pixel 9 models.

Details are coming…